WikipediaFS lets you treat MediaWiki articles as files on a Linux system, so you can view and edit their wiki text using your standard editors and command-line tools.

Steps for setting up

If you want to have a quick play with WikipediaFS, here is the series of steps I used today to install it from scratch on an Ubuntu 6.06 (Dapper) system. Most of these can probably be cut and pasted directly onto a test machine, and should hopefully work on any modern Ubuntu or Debian system:

# Load the FUSE module:

modprobe fuse
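To confirm the module actually loaded before going further, one option is to check that the kernel lists a `fuse` filesystem type in `/proc/filesystems` and that the `/dev/fuse` device node exists. This is a minimal sketch; the helper name `fuse_ready` is hypothetical (not part of FUSE or WikipediaFS), and both paths are parameters so it can be tried against test files.

```shell
# Hypothetical helper: succeed only if the kernel lists the "fuse"
# filesystem type and the FUSE character device exists.
#   $1 - filesystems list (default: /proc/filesystems)
#   $2 - FUSE device node (default: /dev/fuse)
fuse_ready() {
    fs_list=${1:-/proc/filesystems}
    dev=${2:-/dev/fuse}
    # Lines look like "nodev<TAB>fuse"; match the last field exactly,
    # so "fuseblk" or "fusectl" alone do not count.
    awk '$NF == "fuse" { found = 1 } END { exit !found }' "$fs_list" || return 1
    # -c: the path must exist and be a character device.
    [ -c "$dev" ]
}

# Usage:
#   if fuse_ready; then echo "FUSE is ready"; else echo "FUSE missing: try modprobe fuse"; fi
```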

# Install the FUSE libs:

apt-get install libfuse-dev libfuse2

# Get, build, and install the python-fuse bindings:

cd /tmp

mkdir python-fuse

cd python-fuse/

wget http://wikipediafs.sourceforge.net/python-fuse-2.5.tar.bz2

bunzip2 python-fuse-2.5.tar.bz2

tar xf python-fuse-2.5.tar

cd python-fuse-2.5

apt-get install python-dev

python setup.py build

python setup.py install

# Get, build, and install WikipediaFS

cd /tmp

mkdir wikipediafs

cd wikipediafs/

wget http://optusnet.dl.sourceforge.net/sourceforge/wikipediafs/wikipediafs-0.2.tar.gz

tar xfz wikipediafs-0.2.tar.gz

cd wikipediafs-0.2

python setup.py install
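The downloads above come in two archive formats: the python-fuse `.tar.bz2` is decompressed with `bunzip2` and then untarred, while the WikipediaFS `.tar.gz` is unpacked with `tar xfz`. GNU tar can handle both in a single step (`-j` for bzip2, `-z` for gzip). A small sketch of a helper that picks the flag from the file extension; the name `unpack` is hypothetical, and `-j` assumes the `bzip2` program is installed.

```shell
# Hypothetical helper: extract a .tar.bz2 or .tar.gz archive in one step.
#   $1 - archive path
#   $2 - destination directory (default: current directory)
unpack() {
    archive=$1
    dest=${2:-.}
    case "$archive" in
        *.tar.bz2|*.tbz2) tar -xjf "$archive" -C "$dest" ;;  # replaces bunzip2 + tar xf
        *.tar.gz|*.tgz)   tar -xzf "$archive" -C "$dest" ;;  # same as tar xfz
        *) echo "unpack: unsupported archive: $archive" >&2; return 1 ;;
    esac
}

# Usage:
#   unpack python-fuse-2.5.tar.bz2 && cd python-fuse-2.5
#   unpack wikipediafs-0.2.tar.gz && cd wikipediafs-0.2
```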

# Install the “fusermount” program (if not installed):

# First, edit /etc/apt/sources.list (e.g. with “nano /etc/apt/sources.list”) and uncomment the line that enables installing from universe, e.g. a line like this: “deb http://au.archive.ubuntu.com/ubuntu/ dapper universe”

apt-get update

apt-get install fuse-utils
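If you would rather not edit `/etc/apt/sources.list` by hand, the same change (uncommenting the `universe` line) can be scripted. This is a hypothetical sketch: `enable_universe` is not a standard tool, and it prints the modified file to standard output instead of editing in place, so you can review the result before copying it over the real file as root.

```shell
# Hypothetical helper: print a sources.list with any commented-out
# "deb ... universe" or "deb-src ... universe" lines uncommented.
# All other lines, including "##" comments, pass through unchanged.
#   $1 - sources.list path (default: /etc/apt/sources.list)
enable_universe() {
    sed -E 's/^#[[:space:]]*((deb|deb-src)[[:space:]].*[[:space:]]universe([[:space:]].*)?)$/\1/' \
        "${1:-/etc/apt/sources.list}"
}

# Usage: review the change, then install it and refresh the package lists:
#   enable_universe > /tmp/sources.list.new
#   diff /etc/apt/sources.list /tmp/sources.list.new
#   cp /tmp/sources.list.new /etc/apt/sources.list && apt-get update
```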

# Mount something:

mkdir ~/wfs/

mount.wikipediafs ~/wfs/

# Running the above command creates a default configuration file. Edit it now:

nano ~/.wikipediafs/config.xml

# You may want to create a throwaway Wikipedia login for testing WikipediaFS now; this will allow you to edit articles.

# It's up to you whether to use your main account or a test account; I preferred to set up a separate test account.

# Either way, keep a note of the account's username and password for the <site> block below.

# Now uncomment the example French (FR) Wikipedia site and change it to the English site. The <site> block should look something like this:

# --- START ---

<site>

<dirname>wikipedia-en</dirname>

<host>en.wikipedia.org</host>

<basename>/w/index.php</basename>

<username>Your-test-account-username</username>

<password>whatever-your-password-is</password>

</site>

# --- END ---
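Rather than typing the `<site>` block by hand, a small helper can print it from your account details. This is a hypothetical sketch (`site_block` and `xml_escape` are not part of WikipediaFS): it emits the same five elements as the example block, escapes `&`, `<` and `>` so that a password containing them still produces valid XML, and defaults `<basename>` to `/w/index.php`. Paste its output into `~/.wikipediafs/config.xml` in place of the example site.

```shell
# Hypothetical helper: escape the characters that are special in XML text.
xml_escape() {
    printf '%s' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}

# Hypothetical helper: print a WikipediaFS <site> block.
#   $1 dirname  $2 host  $3 username  $4 password  $5 basename (default /w/index.php)
site_block() {
    cat <<EOF
<site>
  <dirname>$(xml_escape "$1")</dirname>
  <host>$(xml_escape "$2")</host>
  <basename>$(xml_escape "${5:-/w/index.php}")</basename>
  <username>$(xml_escape "$3")</username>
  <password>$(xml_escape "$4")</password>
</site>
EOF
}

# Usage:
#   site_block wikipedia-en en.wikipedia.org Your-test-account-username 'whatever-your-password-is'
```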

# Then unmount and remount the filesystem to ensure the above gets applied:

fusermount -u ~/wfs/

mount.wikipediafs ~/wfs/
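Before moving on, it can be worth confirming that the remount actually took effect. One way, assuming a Linux system where active mounts are listed in `/proc/mounts`, is to look for the mount point in the second field of that file. A minimal sketch; `is_mounted` is a hypothetical name.

```shell
# Hypothetical helper: succeed if directory $1 is an active mount point.
#   $1 - directory to check (e.g. ~/wfs)
#   $2 - mount table (default: /proc/mounts)
# Note: /proc/mounts escapes spaces in paths as \040; this simple check ignores that.
is_mounted() {
    # Resolve to an absolute, symlink-free path; fails if the directory is unreachable.
    dir=$(cd "$1" 2>/dev/null && pwd -P) || return 1
    awk -v d="$dir" '$2 == d { found = 1 } END { exit !found }' "${2:-/proc/mounts}"
}

# Usage:
#   if is_mounted ~/wfs; then ls ~/wfs/; else echo "not mounted"; fi
```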

Using it

# You can now use the filesystem. For example:

cat ~/wfs/wikipedia-en/Japan | less

# This should show the text of the Japan article.

# See what’s in the sandbox:

cat ~/wfs/wikipedia-en/Wikipedia:Sandbox

# Now edit the sandbox:

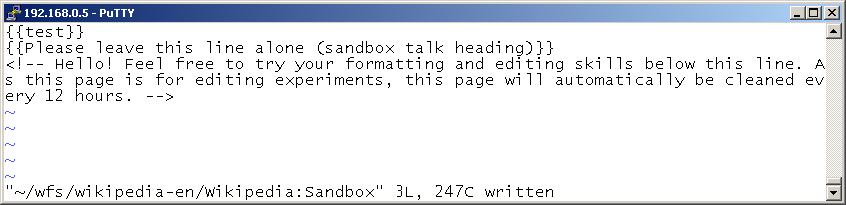

nano ~/wfs/wikipedia-en/Wikipedia:Sandbox

# Change some stuff, save, wait about 10 seconds for the save to complete, and (fingers crossed) see if the changes worked.
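Since a save takes a few seconds and, as described in the next section, can fail without any warning, one cautious approach is to write your edit from a local draft, wait, then read the article back and compare. This is a hypothetical sketch (`save_and_check` is not part of WikipediaFS) with two assumptions to keep in mind: WikipediaFS may return a cached copy, so a match does not prove the wiki accepted the edit; and MediaWiki may normalise whitespace, so a mismatch is worth inspecting with `diff` rather than treated as a certain failure.

```shell
# Hypothetical helper: overwrite an article file with a local draft,
# wait, then read the article back and compare it byte for byte.
#   $1 - article file, e.g. ~/wfs/wikipedia-en/Wikipedia:Sandbox
#   $2 - local draft containing the new wiki text
#   $3 - seconds to wait before re-reading (default: 10)
save_and_check() {
    article=$1
    draft=$2
    delay=${3:-10}
    cat "$draft" > "$article" || return 1   # overwrite the article with the draft
    sleep "$delay"
    if cmp -s "$draft" "$article"; then
        echo "saved: $article"
    else
        echo "NOT saved (contents differ): $article" >&2
        return 1
    fi
}

# Usage:
#   cp ~/wfs/wikipedia-en/Wikipedia:Sandbox /tmp/sandbox.txt
#   nano /tmp/sandbox.txt
#   save_and_check ~/wfs/wikipedia-en/Wikipedia:Sandbox /tmp/sandbox.txt
```

Keeping the draft in a local file also means the text survives if the save is silently lost.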

Probably not production ready

I have to emphasise here that WikipediaFS does seem a little flaky: sometimes it works, and sometimes it doesn’t. In particular, after playing with Wikipedia’s sandbox, I wanted to reset it from this wiki text:

{{test}}

<!-- Hello! Feel free to try your formatting and editing skills below this line. As this page is for editing experiments, this page will automatically be cleaned every 12 hours. -->

To this wiki text:

{{Please leave this line alone (sandbox talk heading)}}

<!-- Hello! Feel free to try your formatting and editing skills below this line. As this page is for editing experiments, this page will automatically be cleaned every 12 hours. -->

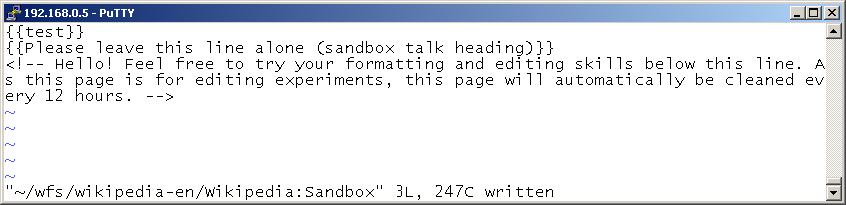

That is, a one-line change. Simple, you say? Well, sorry, but you’d be wrong! In particular, there seems to be something about “}}” and the character before it that caused my WikipediaFS installation to behave very strangely. An edit would appear to have saved successfully, but when you went back into editing the file, all the changes you had made were lost.

There was no indication that the save had failed, but it actually had.

So, I tried breaking the above change down into smaller and smaller pieces, until the save worked. Here are the pieces that I could get to work:

- Adding “{{Please leave this line alone (sandbox talk heading)” (note no closing “}}”, because it wouldn’t save successfully with that included)

- Deleting “{{”

- Deleting “t”

- Deleting “es”

- Deleting the newline

- At that stage, I simply could not successfully delete the final “t”, no matter what I did. Eventually I gave up doing this with WikipediaFS, and deleted the “t” using my normal account and web browser.

So, although WikipediaFS is fun to play with and is certainly an interesting idea, I do have to caution you that it can sometimes silently lose edits, so in its current form you might not want to use it as your main editing method.