If you’re compressing data for backups, you probably only care about 4 things:

- Integrity: The data that you get out must be the same as the data that you put in.

- Disk space used by the compressed file: The compressed file should be as small as possible.

- CPU or system time taken to compress the file: The compression should be as quick as possible.

- Memory usage whilst compressing the file.

Integrity is paramount: any method that fails it should be rejected outright. Memory usage matters least, because a method that needs too much RAM will already be penalised for being slower, since swapping to disk is thousands of times slower than working in RAM.
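As a rough illustration of measuring the four criteria above, here is a minimal Python sketch. It assumes Python's standard-library codecs (`bz2`, `lzma`, `zlib`) as stand-ins for the command-line tools, which is my substitution and not how the tests in this post were run; the names `CODECS` and `measure` are hypothetical. Memory is reported as the process's peak resident set size from `resource.getrusage`, a whole-process high-water mark (Unix only, kilobytes on Linux) rather than a per-codec figure.

```python
import bz2
import lzma
import resource
import time
import zlib

# Standard-library codecs used as stand-ins for the command-line tools
# (an assumption: this post tested the CLI programs, not these modules).
CODECS = {
    "bzip2-9": (lambda d: bz2.compress(d, compresslevel=9), bz2.decompress),
    "lzma-6": (lambda d: lzma.compress(d, preset=6), lzma.decompress),
    "zlib-9": (lambda d: zlib.compress(d, 9), zlib.decompress),
}


def measure(name, data):
    """Measure one codec on `data` against the four criteria."""
    compress, decompress = CODECS[name]
    start = time.process_time()  # CPU time of this process, not wall-clock time
    packed = compress(data)
    cpu_seconds = time.process_time() - start
    # Integrity: the round trip must reproduce the input byte for byte.
    intact = decompress(packed) == data
    # Peak resident memory of the whole process so far (KiB on Linux, Unix only).
    peak_rss = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    return {
        "codec": name,
        "intact": intact,
        "compressed_bytes": len(packed),
        "cpu_seconds": cpu_seconds,
        "peak_rss": peak_rss,
    }
```

`measure` takes the whole input as bytes, so it suits a sample of a dump rather than a full 1 GB file.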

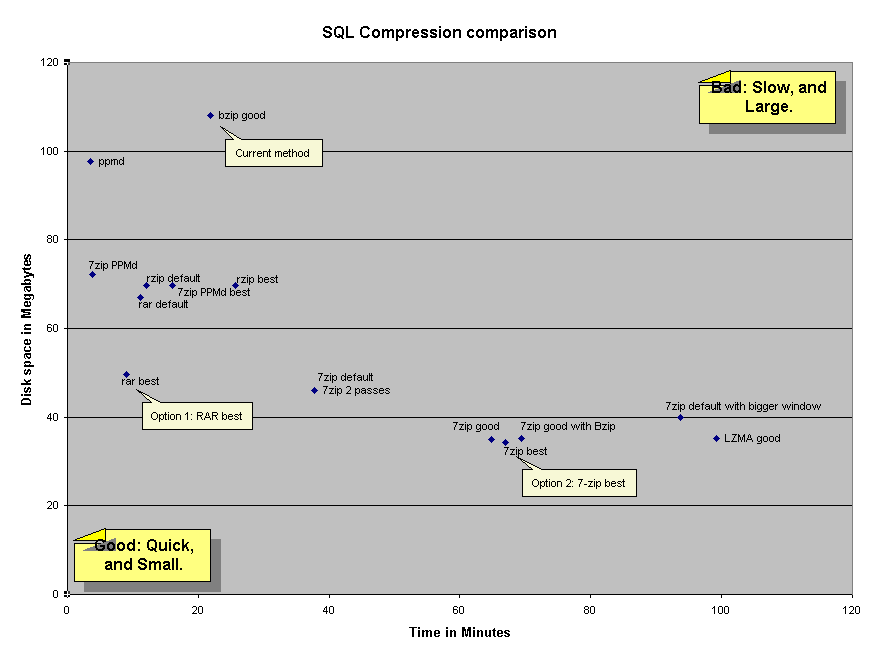

So essentially it comes down to a trade-off of disk space versus time taken to compress. I looked at how a variety of compression tools available on a Debian Linux system compared: Bzip2, 7-zip, PPMd, RAR, LZMA, rzip, zip, and Dact. My test data was the data I was interested in storing: SQL database dump text files, being stored for archival purposes, and in this case I used a 1 Gigabyte SQL dump file, which would be typical of the input.

The graph of compression results, comparing CPU time taken versus disk space used, is below:

Note: Dact performed very badly, taking 3.5 hours and using as much disk space as bzip2, so it has been omitted from the results.

Note: zip performed badly. It was quick at 5 minutes, but at 160 MB it used too much disk space, so it has been omitted from the results.
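Reading a time-versus-space graph like this amounts to finding the Pareto frontier: a tool is only worth considering if no other tool is both no slower and no larger, and strictly better on one of the two. Dact's omission fits that pattern, since it was slower than bzip2 without being any smaller; zip's omission was instead a judgement call about size. The sketch below is a minimal illustration under those assumptions. `Result` and `pareto_front` are hypothetical names, and integrity failures are dropped first, as argued earlier.

```python
from typing import NamedTuple


class Result(NamedTuple):
    tool: str
    cpu_seconds: float
    size_bytes: int
    intact: bool  # did decompression reproduce the input exactly?


def pareto_front(results):
    """Return intact results not dominated on (cpu_seconds, size_bytes).

    A result is dominated if another one is no slower and no larger,
    and strictly better on at least one of the two.
    """
    candidates = [r for r in results if r.intact]  # integrity is a hard filter
    front = []
    for r in candidates:
        dominated = any(
            o.cpu_seconds <= r.cpu_seconds
            and o.size_bytes <= r.size_bytes
            and (o.cpu_seconds < r.cpu_seconds or o.size_bytes < r.size_bytes)
            for o in candidates
        )
        if not dominated:
            front.append(r)
    # Fastest first, so the list reads from "quick but larger" to "slow but small".
    return sorted(front, key=lambda r: r.cpu_seconds)
```

Each `Result` would be one row of your own measurements. Whatever survives the filter is a genuine trade-off, and choosing among the survivors is up to how much time versus space you can afford.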

What the results tell me is that, rather than using bzip2, there are two good options for large text inputs like SQL dumps: RAR’s maximum compression, for a quick compression that’s pretty space-efficient, and 7-Zip’s maximum compression, for a slow compression that’s very space-efficient.
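If you would rather script the 7-Zip-style option, Python's `lzma` module implements the LZMA/LZMA2 family that 7-Zip's .7z format is built on. It writes .xz files rather than .7z archives, so sizes and times won't match 7-Zip's exactly. The sketch below streams a large SQL dump through it at the highest preset and then checks integrity by comparing SHA-256 hashes of the input and the decompressed output. The function names and file paths are hypothetical. Be aware that the highest preset needs several hundred megabytes of RAM to compress.

```python
import hashlib
import lzma

CHUNK = 1 << 20  # read 1 MiB at a time so a 1 GB dump never sits in memory whole


def compress_dump(src_path, dst_path, preset=9 | lzma.PRESET_EXTREME):
    """Stream src_path into an .xz file and return the SHA-256 of the input."""
    digest = hashlib.sha256()
    with open(src_path, "rb") as src, lzma.open(dst_path, "wb", preset=preset) as dst:
        while chunk := src.read(CHUNK):
            digest.update(chunk)
            dst.write(chunk)
    return digest.hexdigest()


def verify_xz(xz_path, expected_sha256):
    """Decompress xz_path in chunks and check it hashes to expected_sha256."""
    digest = hashlib.sha256()
    with lzma.open(xz_path, "rb") as f:
        while chunk := f.read(CHUNK):
            digest.update(chunk)
    return digest.hexdigest() == expected_sha256
```

For example, `h = compress_dump("dump.sql", "dump.sql.xz")` followed by `verify_xz("dump.sql.xz", h)` confirms the archive round-trips before the original is deleted.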